Navigating AI Governance and Assurance: The case for ISO/IEC 42001 AI Management System Certification

This white paper outlines why AI governance is needed and how your organization can develop and govern AI, and continuously comply with both internal and external requirements, to advance the successful implementation of AI in processes, products and services.

Artificial intelligence (AI) is transforming the world and creating new opportunities and challenges for businesses and society at large. With the arrival of generative AI tools such as ChatGPT and Copilot, experimentation with and first use of AI in organizations have exploded. Successful implementations show that AI can fuel efficiency, quality, innovation and customer satisfaction. However, every new technology comes with its risks. AI is rife with potential challenges and uncertainties that need to be managed and mitigated for safe, reliable and ethical development, application and use.

This is where an AI Management System (AIMS) based on the ISO/IEC 42001 standard becomes essential for organizations managing their AI journey. A structured approach forces you to “think before you act” and continually assess performance and outcomes. This foundational standard on AI management, with its risk management approach and third-party certification, is already recognized as a core pillar to guide development and deliver trusted AI solutions.

The need for safe and responsible use of AI

The need for reliable AI is driven by the increased experimentation with and adoption of its many applications, the growing awareness and expectations of stakeholders, and the evolving ethical and regulatory landscape. Consequently, organizations cannot realize the benefits of AI unless the trust gap between the developers and users of AI is bridged. The trust gap refers to the potential lack of confidence and transparency in your organization’s AI-enabled products and/or services. For example, where it is unclear to users how AI systems make decisions, this leads to uncertainty and skepticism around safety, ethical considerations, data privacy protection and overall credibility. Simply put, our trust in a technology is predicated on our ability to fully understand its uses and gain assurance that it is safe and reliable.

Bridging this trust gap is essential to commercialize and scale AI products and services. It requires that you ensure safe, responsible, reliable and ethical implementation of AI. Moreover, assurance thereof must be provided both to internal stakeholders, such as your employees and senior management, and external stakeholders like customers and shareholders.

Achieving and demonstrating the trustworthiness of your solutions requires a systematic approach covering the entire AI lifecycle, from stakeholder analysis, proper guard rails (like ethical guidelines), use case prioritization and risk identification, all the way to the implementation of relevant controls. As we zoom in on the trust gap, clear needs and expectations can be identified. For example, your organization should:

- Identify and prioritize the areas and applications where AI can add value and create impact, and understand the benefits and risks.

- Establish and maintain a culture of trust, transparency and accountability within the organization.

- Assess and measure the performance and quality of AI systems, and ensure alignment with the trustworthiness principles, existing or upcoming legislation and customer requirements.

- Define roles and responsibilities within the organization, which is especially critical in a technology domain that continues to evolve and develop.

- Adopt and implement processes based on best practice standards (e.g. ISO/IEC 42001) for AI development, implementation and governance.

- Communicate the trustworthiness of AI systems to stakeholders, providing evidence and assurance of compliance with best practices, laws and regulations.

While not exhaustive, this is an outline of core needs and expectations. The Artificial Intelligence Management System standard ISO/IEC 42001 is designed to provide guidance and structure to effectively navigate these.

Role of standardization in governing AI

Every new technology comes with its risks. The ever-changing landscape of AI, coupled with the enormous potential of its application across industries, amplifies these risks. For example, it is impossible to predict all outcomes at the design phase.

It is within this context that management system standards like ISO/IEC 42001 become especially important. Whenever there is a need for public trust, standardization and certification play a pivotal role. Alongside legal, regulatory and ethical considerations, standardization provides a vehicle for enabling scalability, embedding safety and guiding organizations through the uncharted territory that AI currently represents.

Standards establish essential terminology, promote industry-led norms, and capture best practices for assessment and improvement. By bringing clarity and responsibility, standards targeting AI management will greatly aid societal acceptance. They offer a foundation for regulatory compliance and industry adoption, and accelerate the ability to harness AI’s global potential in a safe, responsible and ethical way. They also help ensure the transparency, trust and security that users and other stakeholders need.

Role of AI Management Systems

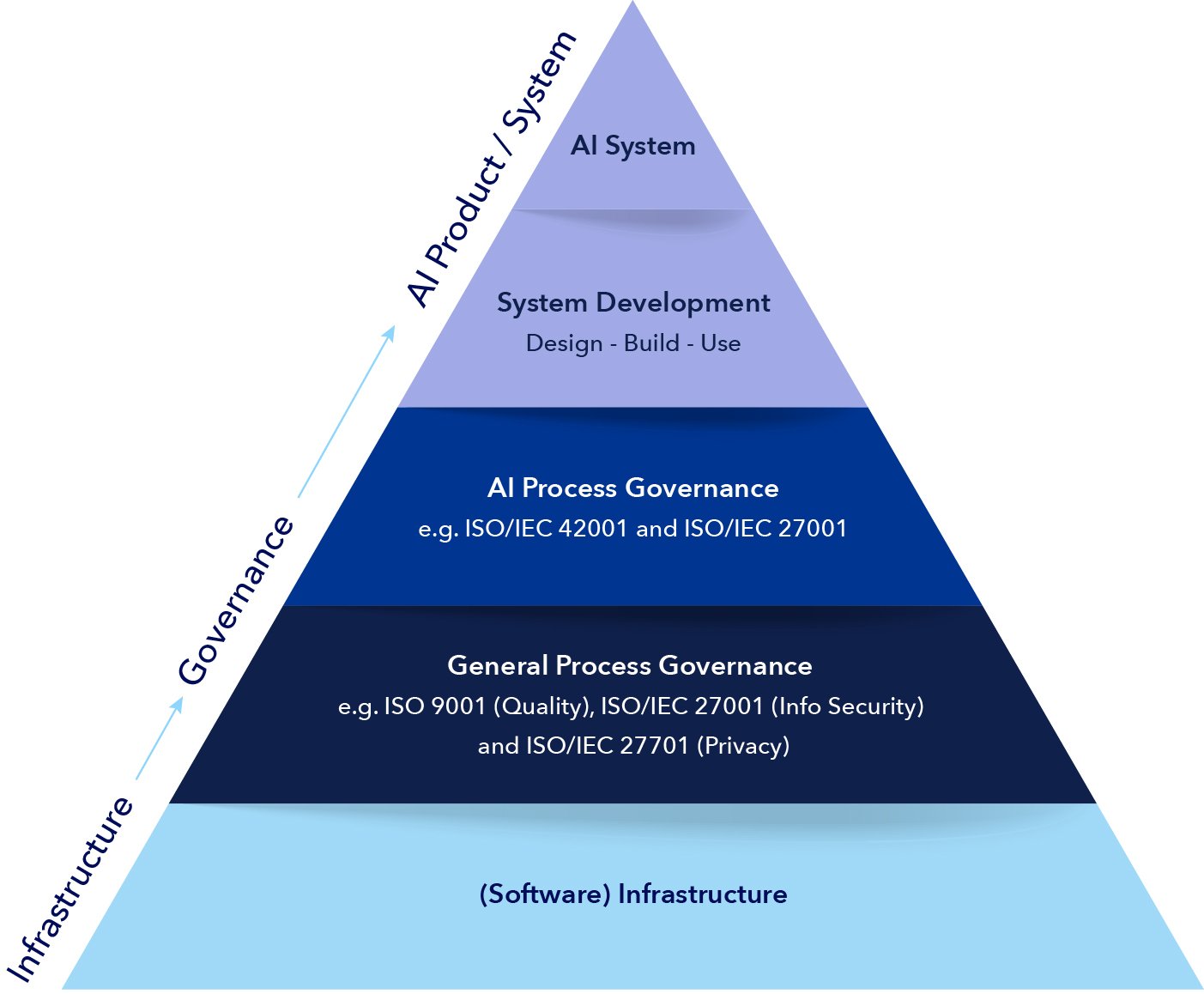

Management system standards and certification can act as a solid foundation for organizations to assemble their governance around AI (see figure 1). The international standard for AI management systems ISO/IEC 42001 provides guidance and requirements for organizations that develop, deploy or use AI systems.

This highly anticipated standard was published at the end of 2023 and is applicable and intended for use across industries and by organizations of any type and size involved in developing, providing or using products and/or services that utilize AI systems. Being an ISO standard, it is compatible with and complementary to other classic ISO management system standards, such as ISO 9001 (quality) and ISO/IEC 27001 (information security). ISO/IEC 42001 strikes a balance between fostering AI innovation and implementing effective governance.

This means that you can draw on and build from any existing management system and general process governance. It ensures a comprehensive approach to govern how you make AI an integral part of products, services or even internal applications.

In the schematic below we position ISO/IEC 42001 as a process governance tool in the ‘pyramid of assurance’. At the pyramid's foundation you find the organization's basic infrastructure: everything that needs to be in place for the organization to function. In order to govern and improve, many organizations choose to implement management systems compliant with quality (ISO 9001), information security (ISO/IEC 27001) or any other management system standard. Certification is then the means to demonstrate compliance to various stakeholders.

As ISO/IEC 42001 follows a similar structure to the other ISO management system standards, it is straightforward to complement your existing management system with the AI management system requirements. In this way your process governance forms the foundation to design, build and use trustworthy AI systems.

The main objectives of ISO/IEC 42001 are to:

- Provide a framework and methodology to establish, implement, maintain and improve an AI management system, covering the entire AI life cycle and taking a risk-based approach.

- Enable demonstration and communication of the trustworthiness of an organization’s AI systems and processes.

- Aid regulatory and legal compliance, which will become increasingly critical with rising national and international regulations such as the EU AI Act, which entered into force in August 2024.

- Facilitate the interoperability and integration of AI systems and processes.

- Promote collaboration and coordination among stakeholders.

- Support innovation and improvement of AI systems and processes.

- Encourage adoption and dissemination of best practices and standards on AI management.

The relationship between ISO/IEC 42001 and AI laws and regulations is expected to be synergistic and mutually beneficial. While the EU AI Act has a marked product focus, adopting an AI Management System provides a foundation on which to build and deliver consistent, predictable and trusted AI systems. The controls of the standard are in line with what the regulations require.

Ultimately, a well-implemented management system that complies with all relevant standards will ensure integrity, privacy and ethical use of data, whilst being adaptable enough to integrate ever-changing requirements stemming from customers, technology advancements, legal and regulatory authorities and so on.

The way forward?

To start the process of adopting a management system certified to ISO/IEC 42001, you can use DNV's article on “8 steps to certification of ISO/IEC 42001 management system” and its accompanying self-assessment tool as guidance. The process starts with a senior management review to identify the main reasons for adopting an AI management system (AIMS). After this, organizations should obtain the ISO/IEC 42001 standard and set a strategic direction that matches the identified goals.

The following steps include planning and resource allocation, understanding and mapping key processes, and identifying training needs for staff. Preparing, developing and implementing applicable processes and procedures are typical steps, and it is important to conduct internal audits and management reviews to check the system's effectiveness along the way. Using DNV's self-assessment tool will help identify gaps against the standard's requirements and will enable a focused approach to meeting them.

Why choose DNV?

As a world-leading certification body, DNV is the chosen partner for 80,000 companies worldwide for their management system certification and training needs. We have unique competences, industry knowledge and capabilities relevant to AI and other management systems. When choosing DNV, you get:

- A global player in information security and privacy assurance: We have a robust track record within information security and privacy certification and training, both of which are crucial to integrate into your AI management.

- Risk Based Certification™: We employ a proactive risk-based approach to certification, which means we can help organizations identify potential risks in their AI systems early on to ensure they are addressed before becoming an issue.

- Lumina™: This is our data-smart benchmarking tool that provides deeper insights and analytics into the management system, from most common failures and fixes to a full overview of all company sites and comparisons against peers.

- Training management: Digital solutions to help your company manage and ensure consistent awareness and competence development of employees across your whole organization.

- Competent auditors who take a pragmatic approach and listen to the customer's needs, whilst taking care that compliance with the standard is assessed.

- Customer management processes structured to deliver a superior customer experience.

DNV is committed to providing reliable and value-adding AI assurance services. Building on our independence, third-party role and principle of not compromising on quality and integrity, we partner with customers to help them navigate the complexities of AI and related challenges with confidence, and to ensure the governance necessary to provide AI systems that are trusted to be safe, reliable and secure.